Most support teams know their CSAT scores. Fewer know why those scores drop—or what to do about it before customers churn.

The problem isn’t lack of feedback. It’s the gap between collecting feedback and acting on it. By the time a low CSAT score shows up in a monthly report, the dissatisfied customer has already told three friends, posted a review, or switched to a competitor.

CSAT improvement software closes this gap. It connects feedback collection, analysis, and response in one system—turning CSAT from a backward-looking report into a real-time operating signal. When implemented correctly, it reduces response time, improves consistency, and raises CSAT scores at scale without adding headcount.

Key Takeaways

- CSAT improvement software connects feedback collection, analysis, and action in one system—reducing average response time from days to hours and ensuring every customer receives consistent follow-up, not just the ones who complain loudest.

- Teams struggle with CSAT not because of effort, but because feedback is fragmented, delayed, and poorly contextualized.

- The biggest CSAT gains come from automating surveys at key moments (immediately after ticket resolution, not three days later) rather than sending more surveys and causing survey fatigue. Timing beats volume.

- Analytics and segmentation explain why scores drop, not just that they drop.

- Workflow automation is critical for acting on negative CSAT before churn happens.

- Software improves visibility and execution, but it cannot fix broken products or weak customer culture.

- Choosing the right tool depends on your CSAT goals, not feature volume.

The CSAT Challenge Teams Face Today

Most teams don’t struggle with collecting CSAT. They struggle with improving it.

As companies scale, CSAT often plateaus. Hiring more agents or sending more surveys stops working. The underlying issues are structural, not effort-related.

After working with dozens of support operations, I’ve seen the same patterns that prevent CSAT improvement:

Feedback lives in silos. Customer satisfaction data scatters across email, chat transcripts, in-app surveys, app store reviews, and social media. A customer might rate support 4/5 via email survey but post a 2-star review on G2 the same day. Without centralized visibility, teams miss the full picture.

Surveys arrive too late. Many teams send CSAT surveys three days after ticket closure—by which time the customer has forgotten details or already formed a lasting impression. Memory fades, accuracy drops, and the chance to recover a poor experience disappears.

Low scores lack context. A CSAT score of 2/5 tells you someone is unhappy. It doesn’t tell you whether the issue was agent behavior, product functionality, unclear documentation, or unrealistic customer expectations. Without context, teams guess at fixes.

Reports are manual and slow. Support managers spend hours each week pulling data from multiple tools, building spreadsheets, and trying to identify trends. By the time patterns emerge, they’re already weeks old.

Negative feedback gets acknowledged but not resolved. A customer complains, someone responds with ‘Thanks for the feedback,’ and nothing changes. The customer feels unheard. The problem persists for the next customer.

This creates a dangerous gap between knowing customers are unhappy and knowing what to fix.

The impact shows up in metrics and customer behavior:

- Churn risk multiplies: Customers who report dissatisfaction and receive no response within 24 hours are 3-4x more likely to cancel than those who get same-day follow-up.

- Trust erodes gradually: Repeated issues—even minor ones—compound over time. A customer who encounters the same problem three times will often leave after the third occurrence, regardless of how well you handle each individual incident.

- Teams operate reactively: Support managers spend 60-70% of their time responding to escalations and fires, leaving only 30-40% for proactive improvements that could prevent those fires.

From experience, CSAT rarely improves sustainably without changing how feedback flows through the organization. This is where CSAT improvement software becomes essential—not as another tool, but as operational infrastructure.

What Is CSAT Improvement Software?

CSAT improvement software is the infrastructure that turns customer satisfaction from a score you track into a system you manage.

Think of it like this: basic survey tools are thermometers—they tell you the temperature. CSAT improvement software is the entire HVAC system—it measures temperature, diagnoses why it’s wrong, adjusts settings automatically, and confirms the fix worked.

Specifically, it helps teams:

Collect feedback at the right moment. Instead of scheduling surveys randomly, the software triggers them immediately after key interactions—when customer memory is fresh and context is clear. A survey sent 30 minutes after ticket closure gets 3-5x higher response rates than one sent three days later.

Analyze scores with context. A score of 3/5 means different things if it came from a new user struggling with onboarding versus an enterprise customer reporting a bug. The software segments by user type, interaction channel, agent, and journey stage—so you understand the ‘why’ behind the number.

Trigger actions when satisfaction drops. When a customer rates their experience below 3/5, the software can automatically create a task, assign it to the right team member, set a deadline, and attach the full conversation history—all within seconds.

Track whether issues are resolved. After a team responds to negative feedback, the software can send a follow-up survey to confirm the customer feels heard and the problem is actually fixed—closing the loop instead of leaving it open.

Unlike basic survey tools, these platforms focus on execution, not just data.

The core loop looks like this:

- Collect: Automated CSAT surveys triggered after key interactions.

- Analyze: Dashboards show trends, segments, and drivers behind scores.

- Act: Workflows route negative feedback to the right team fast.

- Close the loop: Customers are followed up with and outcomes are tracked.

When this loop runs continuously, CSAT becomes a leading indicator, not a lagging metric.
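One way to picture the loop is as a small state machine that each piece of feedback moves through. The sketch below is a minimal illustration, assuming a feedback item passes through the four stages above strictly in order and that the loop only counts as closed once a follow-up response exists. The `Stage` enum and `advance` function are hypothetical names, not any product's API.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    """Hypothetical stages of the CSAT feedback loop, in order."""
    COLLECTED = 1
    ANALYZED = 2
    ACTIONED = 3
    CLOSED = 4


# Each stage may only advance to the next one; CLOSED has no successor.
NEXT_STAGE = {
    Stage.COLLECTED: Stage.ANALYZED,
    Stage.ANALYZED: Stage.ACTIONED,
    Stage.ACTIONED: Stage.CLOSED,
}


def advance(current: Stage, follow_up_score: Optional[int] = None) -> Stage:
    """Move a feedback item to its next stage.

    Closing requires a recorded follow-up response, so an item cannot be
    marked CLOSED just because someone replied to the customer.
    """
    if current not in NEXT_STAGE:
        raise ValueError(f"{current.name} is the final stage")
    nxt = NEXT_STAGE[current]
    if nxt is Stage.CLOSED and follow_up_score is None:
        raise ValueError("cannot close the loop without a follow-up response")
    return nxt
```

Requiring a follow-up score to close is the design choice that separates closing the loop from simply acknowledging feedback.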

Most modern CSAT improvement software integrates with help desks, CRMs, product analytics, and messaging tools. This gives teams the missing context: who the customer is, what they did, and where the experience broke.

CSAT Improvement Software vs Traditional Feedback Tools

| Aspect | Traditional Feedback Tools | CSAT Improvement Software |
|---|---|---|
| Focus | Data collection | Data + action |
| Timing | Periodic surveys | Real-time, trigger-based |
| Context | Limited | User, channel, journey-level |
| Response | Manual | Automated workflows |
| Outcome | Reports | Measurable CSAT improvement |

How CSAT Improvement Software Works Across the Customer Journey

CSAT doesn’t drop randomly. It drops at specific moments in the customer journey.

CSAT improvement software maps feedback to those moments and reacts immediately.

Here’s how this works in practice with a real customer journey:

Scenario: Sarah contacts support about a billing error

Step 1 – Touchpoint detection:

Sarah’s support ticket is marked ‘Resolved’ by the agent. The CSAT system detects this closure event automatically—no manual trigger needed.

Step 2 – Survey trigger:

Within 5 minutes, Sarah receives a 2-question survey via email: ‘How satisfied were you with this support interaction? (1-5)’ and ‘What could we improve?’

Step 3 – Context enrichment:

Sarah rates the experience 2/5 and writes: ‘Agent was polite but couldn’t explain why I was double-charged.’ The system automatically attaches:

- Sarah’s account details (Premium plan, customer for 14 months)

- The full ticket conversation and resolution notes

- Which agent handled the case (Mike T.)

- Channel used (email support)

- Resolution time (48 hours)

Step 4 – Analysis:

The dashboard updates immediately and flags this as a low-score incident. The system notes that this is the third billing-related ticket rated ≤3/5 this week, indicating a pattern rather than an isolated issue.
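The pattern check in this step can be sketched in a few lines. This is a minimal illustration, assuming each rated ticket carries a category tag and a rating timestamp, and that a ‘pattern’ means three or more tickets in the same category rated ≤3/5 within a trailing seven-day window. The `RatedTicket` type, field names, and thresholds are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RatedTicket:
    ticket_id: str
    category: str      # e.g. "billing"; assumed to be tagged on the ticket
    score: int         # CSAT rating on a 1-5 scale
    rated_at: datetime


def flag_patterns(tickets, now, low_max=3, min_count=3, window_days=7):
    """Return categories with at least `min_count` low scores in the window."""
    window_start = now - timedelta(days=window_days)
    low_counts = Counter(
        t.category
        for t in tickets
        if t.score <= low_max and window_start <= t.rated_at <= now
    )
    return sorted(cat for cat, n in low_counts.items() if n >= min_count)
```

Counting low scores per category, rather than reacting to each one alone, is what distinguishes a systemic issue from a one-off bad interaction.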

Step 5 – Action:

Within 2 minutes, an alert goes to:

- The support manager (for agent coaching check)

- The billing team lead (for process review)

- Sarah’s account manager (for customer recovery)

Each receives a task with the full context, a 24-hour SLA, and suggested next steps.
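The fan-out in this step can be expressed as a simple rule. The sketch below assumes a score at or below a threshold creates one task per role, each due 24 hours after the alert. For simplicity it always alerts the same three roles from the scenario; a real rule would likely pick roles by ticket category. The `FollowUpTask` type and `create_alert_tasks` function are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Roles alerted in the scenario, with the purpose of each task.
ALERT_ROLES = {
    "support_manager": "agent coaching check",
    "billing_team_lead": "process review",
    "account_manager": "customer recovery",
}


@dataclass
class FollowUpTask:
    ticket_id: str
    assignee_role: str
    purpose: str
    due_at: datetime


def create_alert_tasks(ticket_id, score, alerted_at, threshold=3, sla_hours=24):
    """Create one task per role when the score is at or below the threshold."""
    if score > threshold:
        return []
    due = alerted_at + timedelta(hours=sla_hours)
    return [
        FollowUpTask(ticket_id, role, purpose, due)
        for role, purpose in ALERT_ROLES.items()
    ]
```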

Step 6 – Follow-up:

The billing team discovers a payment gateway bug affecting recurring charges. They fix Sarah’s bill and email her within 4 hours. Three days later, the system sends a follow-up survey: ‘Did we resolve your concern?’ Sarah responds 5/5.

Outcome: Instead of losing Sarah as a customer, the company:

- Retained a $1,200/year account

- Identified a systemic billing bug affecting 40+ customers

- Provided coaching data for the support team

- Closed the feedback loop with confirmation

Detection, routing, and follow-up all ran automatically; people only had to investigate the cause and fix it.

This turns CSAT from a monthly report into a daily operating signal.

Key Moments Where Software Impacts CSAT Most

- Post-support interactions: Immediate surveys reveal agent quality, resolution clarity, and friction points.

- Onboarding milestones: Early dissatisfaction predicts churn later if ignored.

- Feature adoption moments: Confusing or broken workflows surface quickly through in-app CSAT.

- Incident recovery: Automated follow-ups help rebuild trust after outages or bugs.

Example from practice: triggering CSAT right after first ticket resolution often exposes onboarding gaps, not support issues. Without software, that insight is usually missed.

Problem-to-Outcome Mapping: How Software Directly Improves CSAT

Slow or Inconsistent Feedback → Automated CSAT Surveys

Manual surveys depend on humans remembering to send them—and humans are inconsistent under pressure.

Here’s what typically happens: A support agent closes 40 tickets in a day. At the end of their shift, they’re supposed to manually send CSAT surveys for each one. But they’re exhausted, three tickets are escalated, and their manager needs a report by 5 PM.

Result? Maybe 15 surveys get sent. The other 25 customers never receive one. Even worse, the 15 who do get surveyed receive them 8 hours after the interaction—when memory has faded and context is lost.

The Automated Solution:

CSAT improvement software removes human dependency by triggering surveys based on events, not schedules.

Effective automation practices:

Trigger immediately after key moments. The system sends a survey within 5-30 minutes of ticket closure or chat end, while the experience is still vivid in the customer’s mind. In practice, response rates often drop 40-60% when surveys are delayed beyond 2 hours.

Limit to one clear question. Instead of 10-question surveys that customers abandon, automated systems ask one primary question: ‘How satisfied were you with this interaction?’ Optional follow-up: ‘What could we improve?’ This increases completion rates from 15-20% (long surveys) to 35-50% (short surveys).

Use channel-appropriate delivery. If a customer contacted you via chat, send the survey in-app. If they emailed, send it by email. If they called, send an SMS. Matching the channel reduces friction and improves response rates by 20-30%.
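The three practices above fit into a single event handler. This is a minimal sketch, assuming the help desk emits a closure event that includes the ticket’s channel. The channel-to-delivery mapping follows the rules above, the 30-minute default is one point inside the 5-30 minute range, and the email fallback for unknown channels is an assumption. `on_ticket_closed` and `SurveyJob` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Deliver the survey on the channel the customer already used.
DELIVERY_BY_CHANNEL = {
    "chat": "in_app",
    "email": "email",
    "phone": "sms",
}


@dataclass
class SurveyJob:
    ticket_id: str
    delivery: str
    send_at: datetime
    questions: tuple


def on_ticket_closed(ticket_id, channel, closed_at, delay_minutes=30):
    """Schedule a short CSAT survey in response to a ticket-closure event."""
    delivery = DELIVERY_BY_CHANNEL.get(channel, "email")  # fallback is an assumption
    return SurveyJob(
        ticket_id=ticket_id,
        delivery=delivery,
        send_at=closed_at + timedelta(minutes=delay_minutes),
        questions=(
            "How satisfied were you with this interaction? (1-5)",
            "What could we improve? (optional)",
        ),
    )
```

Because the survey is scheduled by the closure event itself, coverage no longer depends on an agent remembering to send it.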

Business Impact:

This automation improves two critical metrics:

- Survey coverage: From 30-40% of interactions surveyed (manual) to 95-100% (automated)

- Data freshness: From 8-24 hour delays to real-time feedback

Faster, more complete feedback means teams can identify and fix problems the same day they occur—instead of discovering them weeks later in aggregated reports when dozens of customers have already had the same bad experience.

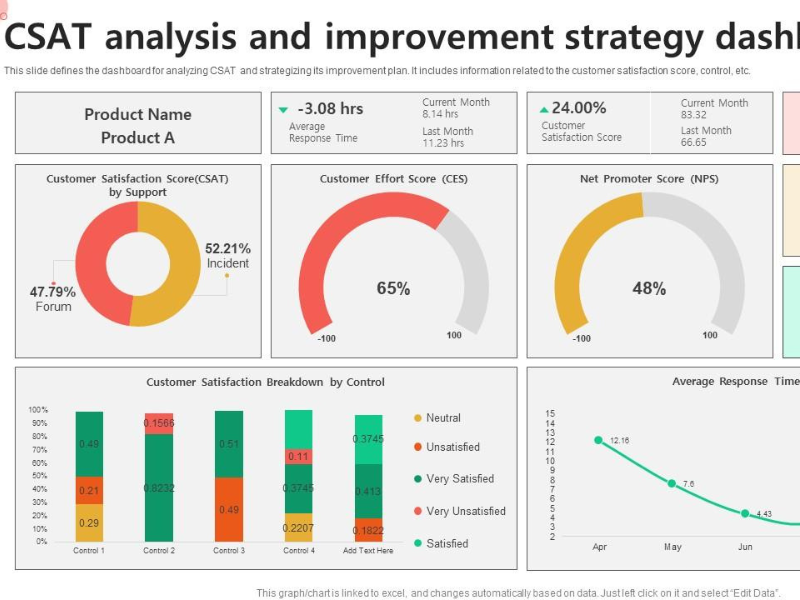

Unclear Reasons for Low Scores → CSAT Analytics & Segmentation

A low CSAT score on its own doesn’t tell you what to fix.

Analytics tools break scores down by:

- Channel (chat, email, phone).

- Agent or team.

- Customer segment or plan.

- Feature or workflow touched.

Segmentation reveals patterns. For example, low CSAT from new users may point to onboarding friction, not support quality.

Dashboards turn guessing into diagnosis.
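Segmentation is essentially a group-by over survey responses. The sketch below assumes the common convention that CSAT is the percentage of responses rated 4 or 5 on a 1-5 scale, and that each response is a dict carrying the dimensions listed above. The field names (`channel`, `plan`, `score`) are illustrative.

```python
from collections import defaultdict


def csat_percent(scores):
    """Share of 1-5 responses rated 4 or 5, as a percentage."""
    if not scores:
        return None
    satisfied = sum(1 for s in scores if s >= 4)
    return 100 * satisfied / len(scores)


def csat_by(responses, dimension):
    """Group responses by one dimension (e.g. 'channel') and compute CSAT for each."""
    groups = defaultdict(list)
    for r in responses:
        groups[r[dimension]].append(r["score"])
    return {key: csat_percent(scores) for key, scores in groups.items()}
```

Running `csat_by` once per dimension (channel, agent, plan, feature) is how a finding like ‘new users score low’ becomes visible.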

Delayed Response to Negative Feedback → Workflow Automation

Speed matters more than perfection when satisfaction drops.

CSAT improvement software automates responses by:

- Alerting managers instantly on low scores.

- Assigning follow-up tasks with SLAs (response time targets).

- Routing issues to product, support, or success teams.

This reduces silent churn and shows customers their feedback matters.
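SLAs only help if someone checks them. This minimal sketch assumes each follow-up task records when it was created and when (if ever) it was resolved, and uses a 24-hour SLA as an example default. The `Task` type and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Task:
    task_id: str
    created_at: datetime
    resolved_at: Optional[datetime] = None


def overdue_tasks(tasks, now, sla=timedelta(hours=24)):
    """Return IDs of unresolved tasks whose SLA deadline has already passed."""
    return [
        t.task_id
        for t in tasks
        if t.resolved_at is None and t.created_at + sla < now
    ]


def sla_compliance(tasks, sla=timedelta(hours=24)):
    """Percentage of resolved tasks that were resolved within the SLA."""
    resolved = [t for t in tasks if t.resolved_at is not None]
    if not resolved:
        return None
    on_time = sum(1 for t in resolved if t.resolved_at - t.created_at <= sla)
    return 100 * on_time / len(resolved)
```

The overdue list drives escalation in the moment; the compliance percentage is the number to watch over time.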

Inconsistent Support Quality → QA Frameworks & Performance Tracking

CSAT data feeds quality assurance directly.

Teams use scorecards to:

- Correlate CSAT with agent behaviors.

- Identify coaching opportunities.

- Track improvements over time.

Consistency improves when feedback is visible and measurable.

Essential Features to Look for in CSAT Improvement Software

CSAT Analytics and Reporting

1. Where is CSAT changing?

Generic dashboards show overall scores: ‘CSAT dropped from 82% to 78% last month.’ Helpful, but not actionable.

Effective analytics break this down:

- Which team/agent is driving the drop? (Agent A: 90% → 85%; Agent B: 75% → 65%)

- Which channel is underperforming? (Email: stable at 85%; Chat: dropped from 80% to 70%)

- Which customer segment is affected? (Enterprise accounts: 88%; SMB accounts: 72%)

- What time patterns exist? (Morning shifts: 82%; Evening shifts: 74%)

This segmentation turns ‘we have a problem’ into ‘we have a chat quality problem on evening shifts affecting SMB customers.’
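To see which segment drives a drop, compare each segment’s CSAT across two periods and sort by change. This is a minimal sketch that takes per-segment CSAT percentages as input; `rank_drops` is a hypothetical name, and the usage lines reuse the illustrative agent figures above.

```python
def rank_drops(previous, current):
    """Return (segment, change in points) pairs, largest drop first.

    `previous` and `current` map segment names to CSAT percentages.
    Segments missing from either period are skipped.
    """
    changes = [
        (segment, current[segment] - previous[segment])
        for segment in previous
        if segment in current
    ]
    return sorted(changes, key=lambda pair: pair[1])


# Usage with the illustrative agent figures.
by_agent_prev = {"Agent A": 90, "Agent B": 75}
by_agent_now = {"Agent A": 85, "Agent B": 65}
print(rank_drops(by_agent_prev, by_agent_now))
# [('Agent B', -10), ('Agent A', -5)]
```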

2. Why is CSAT changing?

Surface-level data shows the drop. Root cause analysis reveals why.

Good analytics correlate CSAT with behaviors and outcomes:

- First-contact resolution rate: When agents resolve issues on first contact, CSAT averages 4.2/5. When customers must follow up, CSAT drops to 2.8/5.

- Response time: Tickets answered within 2 hours score 4.1/5 on average. Those answered after 24 hours score 3.2/5.

- Agent actions: Tickets where agents provided a workaround but no permanent fix score 3.4/5. Those with complete resolution score 4.5/5.

Combined with the segmentation above, this kind of analysis might reveal that CSAT isn’t dropping because agents are rude. It’s dropping because evening-shift agents lack authority to issue refunds or escalate to engineering, forcing customers to follow up the next day.
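Comparisons like the first-contact resolution figures above come from splitting scores on a ticket attribute. This minimal sketch assumes each rated ticket is a dict with a 1-5 `score` and a boolean attribute such as `first_contact_resolution` (an illustrative field name). A gap shows correlation, not causation, so treat it as a lead to investigate.

```python
from statistics import mean


def score_gap(tickets, attribute):
    """Compare average CSAT (1-5) for tickets with and without a boolean attribute."""
    with_attr = [t["score"] for t in tickets if t[attribute]]
    without_attr = [t["score"] for t in tickets if not t[attribute]]
    if not with_attr or not without_attr:
        return None  # nothing to compare against
    return {
        "with": mean(with_attr),
        "without": mean(without_attr),
        "gap": mean(with_attr) - mean(without_attr),
    }
```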

3. What happens if we don’t act?

The most valuable analytics connect CSAT to business outcomes, particularly churn.

Churn correlation example:

- Customers who rate 5/5: 5% churn within 90 days

- Customers who rate 4/5: 8% churn within 90 days

- Customers who rate 3/5: 22% churn within 90 days

- Customers who rate 1-2/5: 45% churn within 90 days

This quantifies the cost of inaction. If you have 100 customers rating experiences 1-2/5 this month, expect to lose 45 of them in the next quarter unless you intervene. At $1,200 average annual contract value, that’s $54,000 in at-risk revenue.
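The arithmetic behind this estimate is easy to script so it can be rerun each month. This minimal sketch uses the example churn rates above as its lookup table; they are illustrative, so substitute your own observed 90-day churn by score band. The function name is hypothetical.

```python
# Example 90-day churn rates by CSAT score band (from the illustration above).
CHURN_RATE_BY_BAND = {
    "5": 0.05,
    "4": 0.08,
    "3": 0.22,
    "1-2": 0.45,
}


def revenue_at_risk(customers_by_band, annual_contract_value):
    """Expected churned customers and annual revenue at risk across score bands."""
    expected_churn = sum(
        count * CHURN_RATE_BY_BAND[band]
        for band, count in customers_by_band.items()
    )
    return expected_churn, expected_churn * annual_contract_value


# 100 customers rating 1-2/5 at $1,200 average annual contract value.
customers, revenue = revenue_at_risk({"1-2": 100}, 1200)
print(round(customers), round(revenue))  # 45 54000
```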

What ‘Enough’ Looks Like:

Static monthly reports (‘CSAT was 78% in March’) don’t enable action. Dynamic analytics that update in real-time, segment by multiple dimensions, and correlate with churn give teams the insight to act before it’s too late.

Survey Creation and Customization

Effective surveys are:

- Short and targeted.

- Triggered by behavior, not calendars.

- Customizable by channel and segment.

Flexibility here directly affects response quality.

Omnichannel Feedback & Support Integration

CSAT data is most useful when connected to:

- Help desks.

- CRMs.

- Chat and messaging tools.

Integration eliminates manual context-switching.

Feedback Management & Action Workflows

Feedback without action kills trust.

The software should support:

- Ownership assignment.

- Status tracking.

- Closed-loop follow-ups.

Execution matters more than insight.

Automation and AI Capabilities

AI features help with:

- Sentiment detection (emotion in text).

- Trend prediction.

- Response prioritization.

Useful, but not a replacement for clear processes.

What CSAT Improvement Software Can and Cannot Fix

Poor visibility into customer sentiment:

Software centralizes feedback from all channels into one dashboard, eliminating the need to manually check email surveys, chat logs, in-app ratings, and review sites separately. You see the full picture in real time.

Slow reaction to dissatisfaction:

Automated workflows route negative feedback to the right team within minutes instead of days. A customer rating 2/5 at 10 AM can receive a personal response by 2 PM the same day—often preventing churn before it happens.

Inconsistent follow-up processes:

When follow-up depends on individual agents remembering to reply, some customers get responses and others don’t. Software enforces consistency by creating tasks, setting SLAs, and tracking completion rates—ensuring every piece of negative feedback gets addressed.

Fragmented feedback data:

Instead of scattered insights across multiple tools, software aggregates CSAT data with CRM records, support tickets, and product usage—giving you context. You see not just that a customer is unhappy, but who they are, what they purchased, which features they use, and what problems they’ve reported before.

What Software Alone Cannot Fix:

Broken product experiences:

If your product has fundamental usability issues or missing features customers need, no amount of survey optimization will fix that. Software will surface these problems faster—but you still need product teams to prioritize and ship improvements.

Misaligned incentives or culture:

If support agents are measured only on ticket volume, not satisfaction, they’ll optimize for speed over quality. If leadership doesn’t prioritize customer feedback, survey data sits unused. Software provides the infrastructure for improvement, but culture determines whether teams act on it.

Undertrained teams:

Automated workflows can route a complaint to an agent, but if that agent doesn’t know how to de-escalate angry customers or lacks authority to issue refunds, the customer still has a bad experience. Software makes training gaps visible—it doesn’t replace training.

Chronic under-resourcing:

If you’re running a 500-ticket-per-day support queue with three agents, automation will help you work more efficiently—but you’re still fundamentally under-resourced. Software buys time by prioritizing urgent issues, but it can’t replace hiring when workload exceeds capacity.

The Right Perspective:

CSAT improvement software amplifies good systems and exposes weaknesses in bad ones. If your processes are solid but execution is inconsistent, software will dramatically improve results. If your processes are broken, software will show you exactly where they’re failing—giving you the data to fix them strategically rather than guessing.

CSAT Software vs Manual CSAT Improvement

| Aspect | Manual | Software |
|---|---|---|
| Speed | Slow | Real-time |
| Scale | Limited | High |
| Consistency | Variable | Standardized |
| Insight | Shallow | Context-rich |

How to Choose the Right CSAT Improvement Software

Align Features With Your CSAT Goals

Start with your biggest CSAT leak.

- Support-driven issues need strong help desk integration.

- Product-driven issues need in-app surveys and segmentation.

- Retention-driven goals need churn correlation.

Buy for outcomes, not features.

Evaluate Ease of Use and Adoption

If teams avoid the tool, CSAT won’t move.

Check:

- Setup time.

- Daily workflow fit.

- Cross-team visibility.

Adoption beats sophistication.

Check Integration With Existing Tools

The best CSAT tool fits into your stack, not replaces it.

CSAT, NPS, and CES: How They Work Together

| Metric | Focus | Use Case |
|---|---|---|
| CSAT | Interaction satisfaction | Short-term fixes |
| NPS | Loyalty | Long-term growth |
| CES | Effort | Friction reduction |

Used together, they provide a complete picture.
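For reference, here is how the three metrics are commonly calculated. This minimal sketch assumes widely used conventions: CSAT as the share of 4-5 ratings on a 1-5 scale, NPS as percent promoters (9-10) minus percent detractors (0-6) on a 0-10 scale, and CES as the average effort rating. CES scales in particular differ between vendors, so check which one your tool uses.

```python
def csat(scores):
    """Percentage of 1-5 ratings that are 4 or 5."""
    if not scores:
        return None
    return 100 * sum(1 for s in scores if s >= 4) / len(scores)


def nps(scores):
    """Percent promoters (9-10) minus percent detractors (0-6), on a 0-10 scale."""
    if not scores:
        return None
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def ces(scores):
    """Average effort rating; scale and direction vary between surveys."""
    if not scores:
        return None
    return sum(scores) / len(scores)
```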

Typical CSAT Benchmarks and Expectations

- Most industries fall between 70–85%.

- SaaS often targets 80%+.

- Improvement is usually incremental, not dramatic.

Consistency matters more than chasing perfect scores.

FAQ – Frequently Asked Questions

What is the best software to improve customer satisfaction scores?

The best CSAT improvement software depends on your primary pain point. If slow response to negative feedback hurts you most, prioritize workflow automation. If low scores lack context, focus on analytics and segmentation. There is no universal “best” tool—only the best fit for your CSAT goals.

How fast can CSAT improvement software impact results?

Most teams see operational improvements within weeks. Measurable CSAT gains usually follow within one to two quarters, depending on volume and issue complexity.

Is CSAT improvement software only for support teams?

No. Product, success, and operations teams benefit equally. CSAT reflects the entire customer journey, not just support interactions.

Conclusion

CSAT improvement software works when it turns feedback into action, not reports. It gives teams speed, clarity, and accountability—three things manual processes can’t sustain. If your CSAT is stuck, the problem is rarely effort. It’s structure. Re-evaluate how feedback flows today, then choose software that fixes that flow end to end.

FAQs – Frequently Asked Questions

What is CSAT improvement software?

CSAT improvement software is a solution designed to help businesses measure, analyze, and improve customer satisfaction scores efficiently. It uses tools like automated surveys, analytics dashboards, and feedback workflows to identify pain points and implement changes that enhance customer experience.

How does CSAT improvement software work?

CSAT improvement software helps track customer feedback through surveys, analyze data for actionable insights, and automate tasks such as follow-ups or workflow routing. By identifying trends and addressing issues quickly, it turns feedback into tangible improvements in satisfaction scores.

What features should I look for in CSAT improvement software?

Key features include:

- Advanced analytics: Measure CSAT, churn rates, and trends.

- Customizable surveys: Tailored for specific customer interactions.

- Omnichannel integration: Collect feedback across all channels.

- Workflow automation: Manage responses and escalate issues seamlessly.

- AI capabilities: Predict behavior and identify sentiment patterns.

Can CSAT improvement software help my team reduce churn rates?

Yes, improving CSAT scores often correlates with reduced churn. The software provides insights into dissatisfied customers, enabling proactive engagement strategies, enhanced service quality, and solutions tailored to customer needs—all of which foster loyalty.

Is CSAT improvement software suitable for small businesses?

Absolutely. Many CSAT software solutions are scalable and cater to businesses of all sizes. Small businesses benefit from streamlined customer feedback processes, automated workflows, and actionable insights that enhance customer loyalty without requiring large teams.

How do I choose the right software to improve CSAT?

- Align features to goals: Ensure the software addresses your specific challenges, such as feedback collection or analytics.

- Evaluate usability: Look for intuitive tools that simplify onboarding for your team.

- Check integrations: Ensure compatibility with your current CRM or help desk solutions.

Software that meets these criteria is more likely to deliver measurable outcomes quickly.

Can CSAT improvement software guarantee higher satisfaction scores?

While software provides essential tools to improve CSAT, the ultimate success depends on factors like product quality, employee training, and company culture. The software enhances processes but doesn’t replace the need for customer-centric strategies.

How does CSAT software differ from traditional feedback tools?

Traditional tools focus on collecting feedback, while CSAT improvement software analyzes feedback, automates responses, and provides actionable insights. It uses real-time tracking and predictive capabilities to proactively address issues and optimize customer interactions.
